Apple investigating augmented reality for improved iPhone Maps

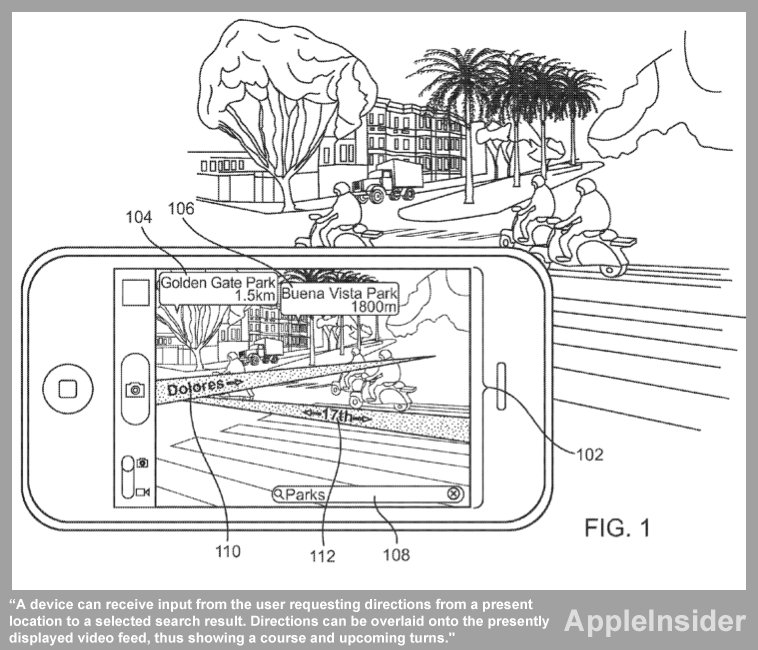

Apple's interest in the feature was revealed this week in a new U.S. Patent and Trademark Office filing titled "Augmented Reality Maps." The filing describes using the iPhone's camera and its wide array of sensors, including GPS, compass and gyroscope, to overlay mapping data on live video.

This process, known as "augmented reality," is already found in many iPhone applications, such as Layar, which can be used for finding local businesses and other locations. Augmented reality presents data to a user in real time by overlaying it on images of the real world captured by a device's camera.

In Apple's concept, streets, locations and other map data would be overlaid onto the live images being displayed through the iPhone camera. Standing along a street, users could see the street name and individual street addresses displayed in front of them, just by holding up their iPhone and pointing its camera at a location.

Apple's application notes that augmented reality programs are already available, but are typically separate from mapping applications that offer users directions to a location.

"Such systems can fail to orient [users] with a poor sense of direction and force the user to correlate the directions with objects in reality," the filing reads. "Such a transition is not as easy as it might seem.

"For example, an instruction that directs a user to go north on Main St. assumes that the user can discern which direction is north. Further, in some instances, street signs may be missing or indecipherable, making it difficult for the user to find the directed route."

Apple's solution would interpret data describing the surrounding areas, and determine what objects are being viewed by the iPhone at present. This information would be overlaid onto the live video screen, and other features, like searching for locations, would also be accessible from this screen.
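As a rough illustration of how a device might decide which mapped objects are in view, the sketch below compares the compass heading of the camera with the bearing from the user's GPS position to each nearby place. It is not taken from the filing: the function names, the 60-degree field of view and the sample coordinates are all assumptions made for the example.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0

def angle_diff(a, b):
    """Signed smallest difference a - b in degrees, in the range [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0

def visible_places(user_lat, user_lon, heading_deg, places, fov_deg=60.0):
    """Return (name, offset) for each place inside the camera's horizontal field of view.

    offset is the angle in degrees between the camera heading and the place
    (negative means left of center, positive means right). The field of view
    is a hypothetical value, not a figure from the filing.
    """
    half_fov = fov_deg / 2.0
    in_view = []
    for name, lat, lon in places:
        offset = angle_diff(bearing_deg(user_lat, user_lon, lat, lon), heading_deg)
        if abs(offset) <= half_fov:
            in_view.append((name, offset))
    return in_view

# Hypothetical sample data: one place due north, one due south, one due east.
places = [
    ("Cafe", 37.001, -122.0),
    ("Bank", 36.999, -122.0),
    ("Library", 37.0, -121.999),
]
in_view = visible_places(37.0, -122.0, heading_deg=0.0, places=places)
```

With the camera pointed north, only the place to the north falls inside the assumed field of view. A real system would also need distance limits and a way to map the offset to a horizontal screen position.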

"In one form of interaction, a device can receive input from the user requesting directions from a present location to a selected search result," the filing reads. "Directions can be overlaid onto the presently displayed video feed, thus showing a course and upcoming turns."

The system could also give users indications that they are headed in the wrong direction. For example, if they must walk north to find a certain restaurant, and they are headed south, the system could inform them there is "no route" to their selected destination.
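One simple way to detect a user heading the wrong way, offered here only as a sketch, is to compare the compass heading with the bearing to the destination and flag the case where the two differ by more than some threshold. The function names, the 90-degree tolerance and the returned messages are assumptions for illustration, not details from the filing.

```python
def heading_error(heading_deg, bearing_to_dest_deg):
    """Absolute angle in degrees (0 to 180) between the travel heading and the destination bearing."""
    return abs((bearing_to_dest_deg - heading_deg + 180.0) % 360.0 - 180.0)

def direction_hint(heading_deg, bearing_to_dest_deg, tolerance_deg=90.0):
    """Return a short status string; the tolerance is a hypothetical threshold."""
    if heading_error(heading_deg, bearing_to_dest_deg) > tolerance_deg:
        return "wrong way"
    return "on course"

# Destination lies to the north (bearing 0), user is walking south (heading 180).
hint = direction_hint(heading_deg=180.0, bearing_to_dest_deg=0.0)
```

The modulo arithmetic handles the wrap-around at north, so a heading of 10 degrees and a bearing of 350 degrees are treated as 20 degrees apart rather than 340.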

The proposed invention made public this week was first filed in February of 2010. It is credited to Jaron Waldman.

Its publication comes just a week after AppleInsider discovered another potential enhancement to the iPhone Maps application. That filing described a system that would exaggerate or even omit some details, like roads or landmarks, to make navigation easier to follow.

Since the release of iOS 3.2 in April 2010, Apple has been relying on its own databases for location-based services. The company has also revealed it is working on a "crowd-sourced traffic" service that will launch in the next few years.

The company also purchased Google Maps competitor Placebase in 2009, and in 2010 it acquired another online mapping company, Poly9. The company's interest in "radically" improving the iPhone Maps application has also been highlighted in job listings advertised by Apple.

Neil Hughes
