Apple exploring iPads with translucent, synchronized displays for augmented reality

Augmented reality (AR) describes a live view, direct or indirect, of a physical, real-world environment whose elements are augmented by computer-generated sensory input, such as annotations, sound or graphics presented through an information layer.

In one filing published for the first time Thursday by the United States Patent and Trademark Office and discovered by AppleInsider, Apple notes that despite strong academic and commercial interest in AR systems, many existing implementations are complex and expensive, making them unsuitable for general use by the average consumer.

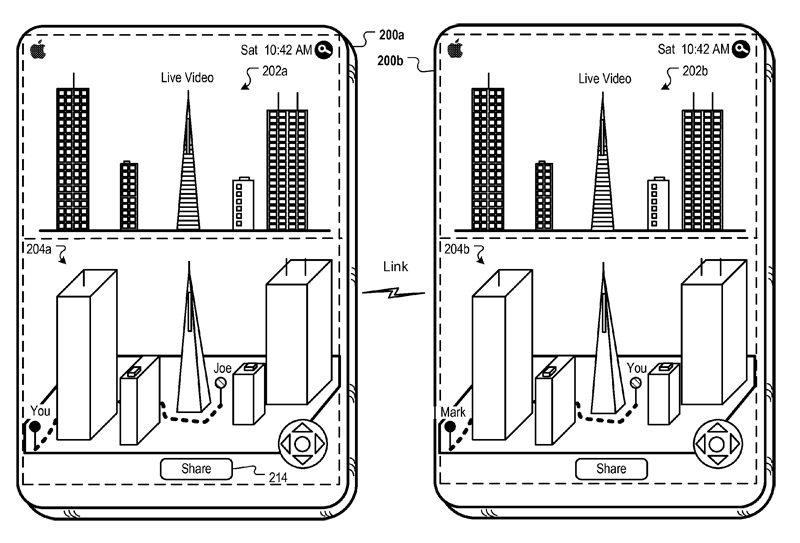

As part of its filing, Apple proposes that iPads could feature a split-screen display that shows an object or subject matter, such as a live video feed from the tablet's camera, on one side of the split, and computer-generated imagery identifying elements in that video feed on the other side.

In one example shown, a user is viewing a live video of the skyline of downtown San Francisco in a first display area, while object recognition is performed in real-time on a captured frame of the video to present information in the second display area, such as balloon callouts identifying the buildings or structures in the live video.

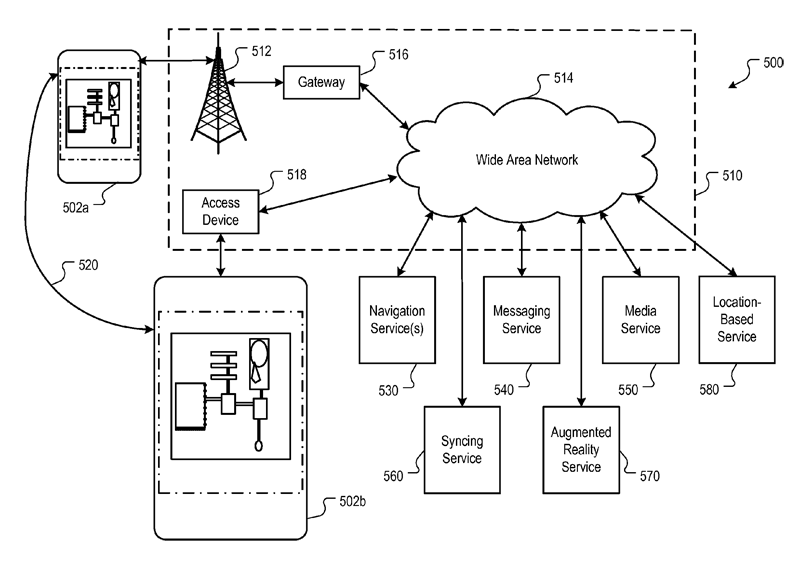

Software running on the iPad could use onboard positioning systems such as GPS, WiFi and Cell ID, along with motion sensors, to transmit information about the live feed to a network service, which could then return 3D models of recognized objects in the live feed that the user could navigate in real-time.

"For example, gyroscopes, magnetometers and other motion sensors can provide angular displacements, angular rates and magnetic readings with respect to a reference coordinate frame, and that data can be used by a real-time onboard rendering engine to generate 3D imagery of downtown San Francisco," Apple said. "If the user physically moves device, resulting in a change of the video camera view, the information layer and computer-generated imagery can be updated accordingly using the sensor data."

More specifically, the company provided an example where the user sets a marker via a pushpin on a building seen in the distance so that a driving or walking route can be computed in real-time and overlaid on the 3D computer-generated imagery to provide directions to that building from the user's current location.
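
To make the sensor-driven update concrete, here is a minimal sketch of how a balloon callout or pushpin marker might be repositioned as the device turns. It is not taken from Apple's filing: the function names `bearing_deg` and `callout_x`, the linear angle-to-pixel mapping, and the assumption that a compass heading and the camera's horizontal field of view are available are all illustrative.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from true north (0 <= result < 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def callout_x(bearing, heading, fov_deg, screen_width):
    """Horizontal pixel position for a callout (hypothetical helper).

    bearing: direction from the user to the landmark, in degrees
    heading: direction the camera is facing, in degrees
    fov_deg: assumed horizontal field of view of the camera
    Returns None when the landmark lies outside the camera's view.
    Uses a simple linear mapping, which is only an approximation
    of a real camera projection.
    """
    # Signed angular offset in the range [-180, 180)
    offset = (bearing - heading + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2.0:
        return None
    return screen_width * (0.5 + offset / fov_deg)
```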

Apple added that the annotated live video, computer-generated imagery and resulting information layer can optionally be shared via a Share Button with one or more other devices, and the AR displays of the devices can be synchronized to account for changes in video views.

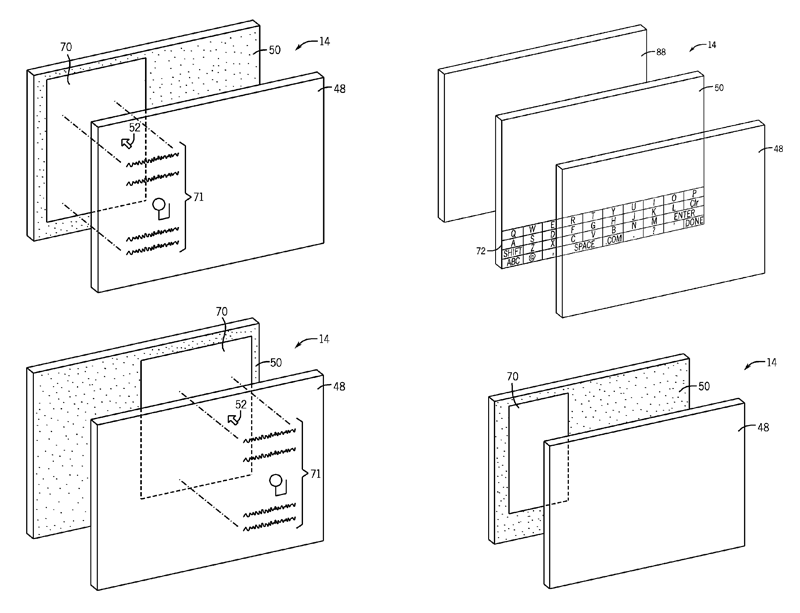

While the filing proposes an AR implementation utilizing existing technologies found on the iPad (and iPhone), a second filing from the company relating to the same subject matter is slightly more ambitious in that it proposes iPads with a display screen having a viewing area with a transparent portion that enables a user to view objects behind the electronic device by looking through the display screen.

The "transparent portion may encompass the entire viewing area, or only a portion of the viewing area of the display," wrote Apple engineer Aleksandar Pance, the sole inventor credited on the patent application titled "Transparent Electronic Device." He added that additional implementations could call for an iPad with two or more display screens (each having respective viewing areas with transparent portions) arranged in an overlaid or back-to-back manner, or two display screens whereby one display screen is partially opaque but displays a transparent window that's movable via multitouch input from the user.

"These overlays whether in handheld or other electronic devices, may provide an 'augmented reality' interface in which the overlays virtually interact with real-world objects," Pance wrote. "For example, the overlays may be transmitted onto a display screen that overlays a museum exhibit, such as a painting. The overlay may include information relating to the painting that may be useful or interesting to viewers of the exhibit. Additionally, overlays may be utilized on displays in front of, for example, landmarks, historic sites, or other scenic locations. […] For example, a tour bus may include one or more displays as windows for users. These displays may present overlays that impart information about locations viewable from the bus."

The same filing also details a viewing routine that would allow a user to view both images and real-world events simultaneously via a single display screen. For example, the viewing routine may allow a portion of the display to be selectively transparent while the remainder of the display screen stays opaque.

"For example, display screen may include an in-plane switching LCD screen in which pixels of the screen default to an 'off' state that inhibits light transmission through the screen," Pance explained. "This may be accomplished by driving a voltage to zero to the pixels in an 'off' state (i.e., the pixels in an opaque region)" while "voltage could then be applied to pixels of the display screen to enable light transmission through such pixels (when desired), allowing a user to view real-world objects through the activated pixels of display screen, thus generating a window in the opaque region."

Apple said such futuristic display screens may include an LCD having pixels that default to an "on" state allowing light transmission and which can be activated to render some or all of the pixels opaque. Alternatively, the company said that the screens could include an OLED display that may selectively deactivate pixels to form a window where the display screen may output information over any image generated by the display screen, over any real-world object viewable by a user through the window, or both.
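
As a rough illustration of the selectively transparent window, the sketch below builds a per-pixel drive mask for a display whose pixels default to an opaque "off" state, then inverts it for the variant whose pixels default to a transparent "on" state. The names `window_mask` and `invert_mask` and the 0/1 encoding are assumptions made for this example; the filing does not describe a software interface.

```python
def window_mask(width, height, window):
    """Return a height x width grid of drive flags (hypothetical encoding).

    1 = apply voltage to the pixel, 0 = leave it undriven.
    For a default-opaque panel, driven pixels become transparent,
    so the 1s mark the see-through window.
    window is (left, top, window_width, window_height) in pixels.
    """
    left, top, win_w, win_h = window
    return [
        [
            1 if left <= x < left + win_w and top <= y < top + win_h else 0
            for x in range(width)
        ]
        for y in range(height)
    ]


def invert_mask(mask):
    """Drive flags for a default-transparent panel: voltage is applied
    everywhere except the window, so the surrounding region turns opaque."""
    return [[1 - flag for flag in row] for row in mask]


if __name__ == "__main__":
    # A tiny 4x3 panel with a 2x1 transparent window starting at (1, 1)
    for row in window_mask(4, 3, (1, 1, 2, 1)):
        print(row)
```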

Sam Oliver


Stephen Silver


Charles Martin


Christine McKee


Malcolm Owen


Mike Wuerthele


14 Comments

The special window on the tour bus is a good idea. Not sure people will use this in everyday life since they typically know their city anyway, and go to the same places.

Imagine the iAd potential for a thing like this though! You see someone walking down the street and you like their shoes and the iPad recognises them and even plots you a route to the nearest shop.

The transparent display won't work unless Apple figures out somewhere else to store the iPad's components besides behind the screen.

In the future, we won't even have to worry about displays anymore. They'll be on contact lenses.


How long before we have iPads that hover?

Sounds like a patent getting around Google Street View, and going their own way for a mapping solution including AR...