Apple researching autostereoscopic 3-D display hardware

Apple has been conducting research on a new breed of display hardware that would employ autostereoscopy to produce three-dimensional images that can be viewed by multiple users without the need for special headgear or glasses, AppleInsider has discovered.

"Modern three-dimensional (3D) display technologies are increasingly popular and practical not only in computer graphics, but in other diverse environments and technologies as well," Apple said in the 25-page filing. "Growing examples include medical diagnostics, flight simulation, air traffic control, battlefield simulation, weather diagnostics, entertainment, advertising, education, animation, virtual reality, robotics, biomechanical studies, scientific visualization, and so forth."

While common forms of such displays require shuttered or passively polarized eyewear, those approaches have not met with widespread acceptance because observers generally do not like to wear equipment over their eyes, the company said. Such approaches are also said to be impractical, and essentially unworkable, for projecting a 3D image to one or more casual passersby, to a group of collaborators, or to an entire audience such as when individuated projections are desired.

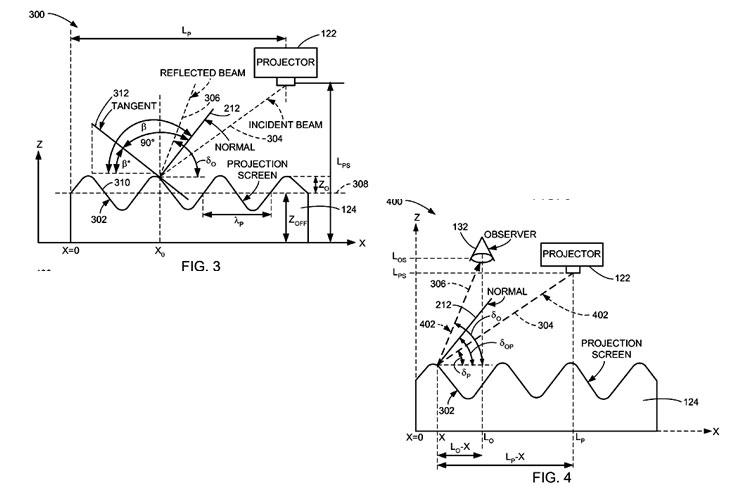

As a result, Apple proposes a three-dimensional display system having a projection screen with a predetermined angularly-responsive reflective surface function. Three-dimensional images would be respectively modulated in coordination with the predetermined angularly-responsive reflective surface function to define a programmable mirror with a programmable deflection angle.

This form of technology would cater to the continuing need for such practical autostereoscopic 3D displays that can also accommodate multiple viewers independently and simultaneously, the company said. Unlike 3D glasses or goggles, it would provide simultaneous viewing in which each viewer could be presented with a uniquely customized autostereoscopic 3D image that could be entirely different from that being viewed simultaneously by any of the other viewers present, all within the same viewing environment, and all with complete freedom of movement.

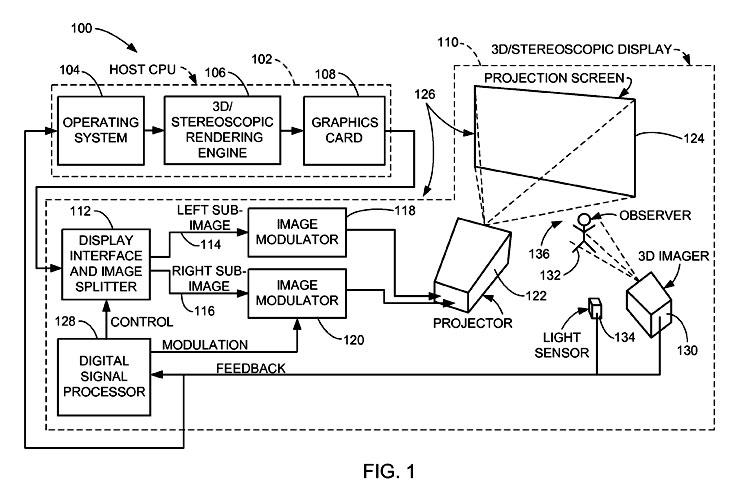

According to the filing, this form of display could include a 3D/stereoscopic rendering engine that renders 3D images and may be implemented in firmware, software, or hardware. The 3D/stereoscopic rendering engine could also be part of a graphics card, code running on a graphics chip's graphics processor unit, a dedicated application specific integrated circuit, specific code running on the host CPU, and so forth.

"The 3D images that are rendered by the 3D/stereoscopic rendering engine are sent to a 3D/stereoscopic display through a suitable interconnect, such as an interconnect based upon the digital video interface (DVI) standard," Apple said. "The interconnect may be either wireless (e.g., using an 802.11x Wi-Fi standard, ultra wideband (UWB), or other suitable protocol), or wired (e.g., transmitted either in analog form, or digitally such as by transition minimized differential signaling (TMDS) or low voltage differential signaling (LVDS))."

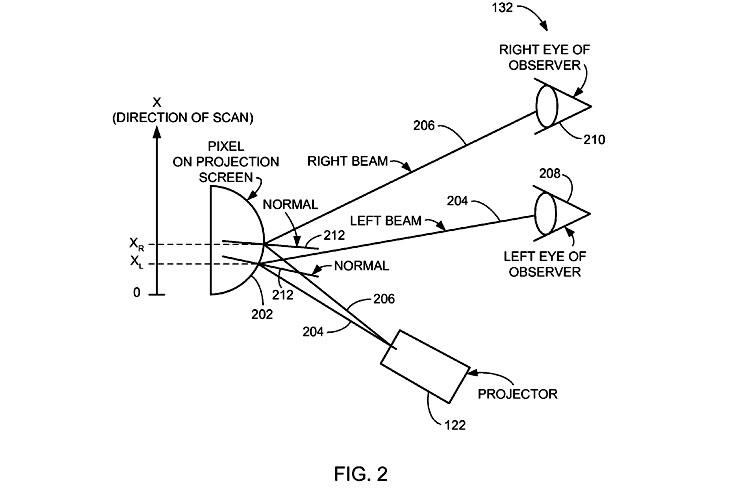

A display interface and image splitter inside the 3D/stereoscopic display would divide the 3D images from the 3D/stereoscopic rendering engine into two 3D sub-images, namely a left sub-image and a right sub-image. The left and right sub-images would be modulated (including being turned on and off) in respective image modulators to enable and control optical projection by a projector of the left and right sub-images respectively into the observer's left and right eyes.

"The observer's brain then combines the two projected optical sub-images into a 3D image to provide a 3D viewing experience for the observer," the filing explains. "The deflection into the observer's respective left and right eyes is accomplished using a projection screen. The projection screen, in combination with image data properly modulated [...] forms a mirror device that is a programmable mirror with a programmable deflection angle."

Broadly speaking, Apple said, this combination turns the projection screen into a programmable mirror that acts as a spatial filter: it reflects light from the projection screen to the observer's left and right eyes as a function of the spatial locations of those eyes, and otherwise reflects no light, as if the light had been filtered out.
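
To make the spatial-filter idea concrete, here is a minimal Python sketch. It is not taken from the filing. It assumes a simplified top-down 2D view in which the screen lies along the x axis at z = 0, and the function names, the `tolerance_deg` parameter, and the coordinates are all hypothetical. It uses the ordinary law of reflection: a surface facet sends projector light to an eye only when its normal bisects the directions to the projector and to the eye, so a pixel is lit only where the screen's local tilt matches that requirement.

```python
import math


def required_tilt_deg(point, projector, eye):
    """Tilt of the surface normal, in degrees from the screen's z axis,
    needed at `point` to reflect projector light toward `eye`.

    All arguments are (x, z) pairs in a top-down view (an assumption of
    this sketch, not something the filing specifies).
    """
    def unit(src, dst):
        dx, dz = dst[0] - src[0], dst[1] - src[1]
        length = math.hypot(dx, dz)
        return dx / length, dz / length

    to_projector = unit(point, projector)
    to_eye = unit(point, eye)
    # Law of reflection: the normal bisects the two unit directions.
    nx = to_projector[0] + to_eye[0]
    nz = to_projector[1] + to_eye[1]
    return math.degrees(math.atan2(nx, nz))


def pixel_lit(local_tilt_deg, point, projector, eye, tolerance_deg=0.5):
    """Light the pixel only if the screen's local tilt at `point` would
    steer the reflection into `eye` (hypothetical tolerance)."""
    needed = required_tilt_deg(point, projector, eye)
    return abs(local_tilt_deg - needed) <= tolerance_deg
```

In this toy model, modulating the image amounts to calling `pixel_lit` once per eye for each screen location, so that light lands only where an eye has been located and is otherwise withheld.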

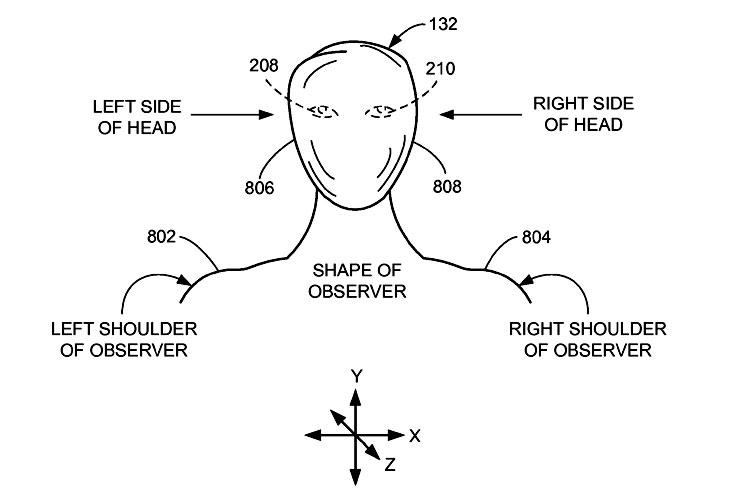

A digital signal processor (DSP) in combination with a 3D imager would also determine the correct location of an observer with respect to the projection screen. Characteristics about the observer, such as the observer's head position, head tilt, and eye separation distance with respect to the projection screen would also be determined by the DSP and the imager.
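
As a rough illustration of how those three measurements could yield the eye locations the display needs, the Python sketch below derives left and right eye positions from a head center, a head tilt, and an eye separation. The function name, the coordinate convention (x toward the observer's right, y up, z as distance from the screen), and the sample numbers are assumptions made for this example; the filing does not describe the computation at this level.

```python
import math


def eye_positions(head_center, tilt_deg, eye_separation):
    """Return (left_eye, right_eye) as (x, y, z) tuples.

    head_center    -- midpoint between the eyes, in meters
    tilt_deg       -- head tilt (roll) in degrees; 0 means the eyes are level
    eye_separation -- distance between the eyes, in meters
    """
    cx, cy, cz = head_center
    half = eye_separation / 2.0
    # Offset from the midpoint to each eye, rotated by the head tilt.
    dx = half * math.cos(math.radians(tilt_deg))
    dy = half * math.sin(math.radians(tilt_deg))
    left_eye = (cx - dx, cy - dy, cz)
    right_eye = (cx + dx, cy + dy, cz)
    return left_eye, right_eye


# Example: an observer 2 m from the screen with eyes 64 mm apart and level.
left, right = eye_positions((0.0, 1.6, 2.0), 0.0, 0.064)
```

A real system would also have to account for head rotation toward or away from the screen, which this sketch ignores.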

"The 3D imager may be any suitable scanner or other known device for locating and determining the positions and characteristics of each observer," the company went on to say. "Such characteristics may include, for example, the heights of the observers, head orientations (rotation and tilt), arm and hand positions, and so forth."

In some embodiments, the 3D imager may be configured as an integral part of the projector, which could be configured to directly illuminate the observer as well as the projection screen. An appropriately located light sensor would then be positioned to pick up the illumination light that is reflected from the observer, determining his or her position relative to the display.

Apple added that the 3D imager and the light sensor could also provide a means for observer input: "For example, the volume in front of the projection screen in which the observer is positioned may be constituted by the 3D display system as a virtual display volume that is echoed as a 3D display on the projection screen. The virtual display volume can then be used for observer input. In one embodiment, the observer can then actuate, for example, a 3D representation of a button to activate certain features on a virtual active desktop. Such an active desktop would be represented virtually in the virtual display volume and, by virtue of the 3D projection on the projection screen, would appear to the observer as a 3D image in the virtual display volume in the immediate presence and proximity of the observer. Other human interface behaviors are similarly possible, as will be understood by persons of ordinary skill in the art in view of the present disclosure."
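
The virtual-button interaction can be pictured as a simple hit test. The Python sketch below assumes, purely for illustration, that each button occupies an axis-aligned box inside the virtual display volume and that the 3D imager reports the observer's hand as a single (x, y, z) point; `VirtualButton`, `pressed_buttons`, and the coordinates are hypothetical and do not come from the filing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualButton:
    """A button modeled as an axis-aligned box in the virtual display volume."""
    name: str
    min_corner: tuple  # (x, y, z) of the corner with the smallest coordinates
    max_corner: tuple  # (x, y, z) of the corner with the largest coordinates

    def contains(self, point):
        # The point is inside when every coordinate lies within the box.
        return all(
            low <= value <= high
            for low, value, high in zip(self.min_corner, point, self.max_corner)
        )


def pressed_buttons(buttons, hand_position):
    """Names of all buttons whose box contains the tracked hand position."""
    return [button.name for button in buttons if button.contains(hand_position)]


# Example: one button floating 0.4 to 0.5 m in front of the screen.
buttons = [VirtualButton("play", (0.0, 0.0, 0.4), (0.1, 0.1, 0.5))]
active = pressed_buttons(buttons, (0.05, 0.05, 0.45))
```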

In concluding its filing, originally submitted back in September of 2006, the Cupertino-based company asserts that such display technology is "straight-forward, cost-effective, uncomplicated, highly versatile and effective, can be surprisingly and unobviously implemented by adapting known technologies, and are thus fully compatible with conventional manufacturing processes and technologies."

AppleInsider Staff
